On the relationship between (Enhanced) Universal Dependencies and Phrase Structure Grammar

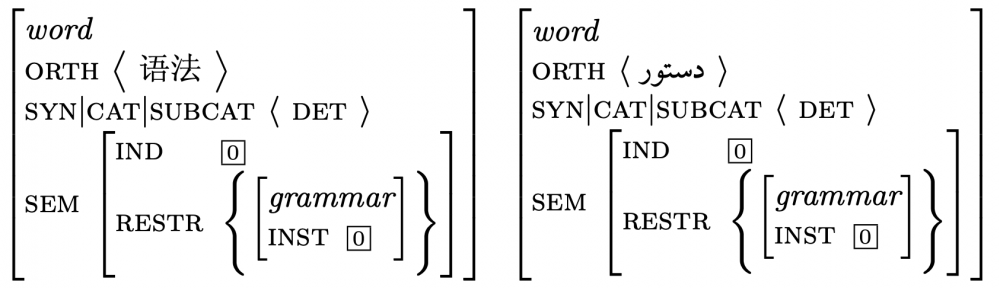

Recent work on neural parsing with pre-trained language models has shown that accurate dependency parsing requires very little supervision and no induction of grammar rules in the traditional sense. First, we show that this holds even for dependency annotation that goes beyond labeled edges between word tokens and that captures much of the same information as richer phrase-based models such as HPSG. Second, we show that dependency parsing can still benefit from being combined with phrase-based syntax, not unlike that of HPSG, and that such combinations might be readily available for many of the treebanks in the UD corpus.